(Originally published at Ribbonfarm.)

Here's a recipe for discovering new ideas:

1. Examine the frames that give structure (but also bias) to your thinking.

2. Predict, on the basis of #1, where you're likely to have blind spots.

3. Start groping around in those areas.

If you can do this with the very deepest frames — those that constrain not just your own thinking, but your entire civilization's — you can potentially unearth a treasure trove of insight. You may not find anything 100% original (ideas that literally no one else has ever seen), but whatever you find is almost guaranteed to be underappreciated.

In his lecture series The Tao of Philosophy, Alan Watts sets out to do just this for Western civilization. He wants to examine the very substrate of our thinking, in order to understand and correct for our biases.

So what is the substrate of Western thought?

Well, if you're a fish, water can be hard to see. Same thing here. In order to see our own thinking, we'll have to triangulate it from the outside.

Watts does this by using the Chinese mindset as a foil for the Western one. He argues that the main cognitive difference lies in the preferred metaphor each of these cultures uses to make sense of the world.

Westerners, he says, prefer to understand things as mechanisms, while the Chinese prefer to understand things as organisms — and these are two very different kinds of processes.

Mechanical vs. organic thinking

So what's the difference?

Mechanisms come together by assembly, when a creator arranges raw material in just the right way to serve a particular purpose. They're typically made out of discrete parts, each with its own purpose that fits into the overall structure. And they're cleanly factored and globally optimized: the result of a far-sighted design process.

Organisms, on the other hand, aren't assembled from the outside. Instead they start out small and simple, and expand outward while gradually complicating themselves. In other words, they grow. The result is a hacky mess of tangled parts, vestiges, fuzzy boundaries, and overlapping purposes: the hazards of a short-sighted, local optimization process.

"What is it for?" and "How does it work?" are the essential questions to ask about a mechanism. "What is its nature?" is the question to ask about an organism — a way of coping with its illegible complexity.

Another way to highlight the difference is to take a page out of feminist theory. We can characterize mechanisms as objects, which exist mostly to enact the desires and wills of their creators, versus organisms as agents/subjects, with desires and wills of their own.

Now let's illustrate with three quick examples.

Creation myths. The story told in Genesis is an exemplar of the Western mechanical mindset. God assembles everything piece by piece out of raw materials, very purposefully, then brings Adam to life by breathing into his nostrils. Here God is portrayed as the Celestial Engineer, and the universe, his animatronic invention.

The Chinese, as if to provide a dramatic contrast, have no creation myth. (OK, this is a slight exaggeration, but the gist is true enough.) The Chinese view of the universe is that of spontaneous growth, without any external agency or overarching purpose. "Nature has no boss" — and therefore no role for a Creator. It's just the physical world, doing what it does: falling forward forever.

The human body. The Western view of the human body is rooted in anatomy. We see the body as an assemblage of parts, each with its own purpose. On the other hand,

The tendency of Chinese thought is to seek out dynamic functional activity rather than to look for the fixed somatic structures that perform the activities. Because of this, the Chinese have no system of anatomy comparable to that of the West. — Ted Kaptchuk, via Wikipedia (emphasis mine)

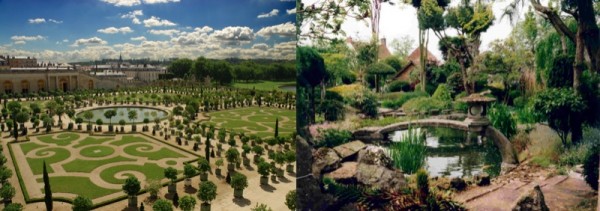

Gardens. This isn't a particularly important example of the different kinds of thinking, but it is vivid. Notice how the West prefers an "assembled" look even in its arrangement of nature:

So that's the main difference. The West tends to see purposeful design where the Chinese see spontaneous growth. This is the key to understanding both the strengths and the weaknesses of the Western worldview.

Darwin's refactor

Darwin's theory of evolution is arguably the linchpin of Western thought.

Essentially it gave us license to use our preferred (mechanical) mindset in trying to explain the natural world, but without the embarrassment of having to invoke God. Specifically, Darwin said it's OK to treat living things as designed artifacts — as mechanisms assembled for a purpose — as long as it's not God's design or God's purpose but the Blind Watchmaker's.

Now this was an amazing breakthrough, and it precipitated an explosion of insight. Darwin deserves all the praise we care to lavish on him. Unfortunately, in our exuberance, we've largely failed to notice that he left our worldview in an inconsistent state, full of dangling references.

Remember this is a legacy worldview. We'd been building all sorts of dependencies on the idea of God. You can't just s/God/evolution/ and expect a 3000-year-old codebase to compile.

What do I mean? I mean we're still dualists. We still think mind is separate from matter. (Even where we've learned to say otherwise, we still act as if it's true.) In our hearts we still hope that consciousness is somehow ontologically separate from matter, just as we still fancy that humans are in an ontological class above the animals. We still imagine ourselves as souls, i.e., as homunculi: little people sitting inside our heads, controlling our vehicular bodies. (Notice how we say, "I have a body," rather than, "I am a body.") We still pine for a version of free will that gives us uncaused causality, prime movement, a little slice of divinity. Some of us even hold out hope that quantum mechanics will miraculously justify these prejudices — but by all accounts no such miracle is coming. And so again and again we fail to appreciate the organic nature of things, because although we've exorcised the ghost, we're still left with a machine.

Specifically, we're limited in how we see ourselves and our creations because we intuitively reject the idea that we are natural organisms. We insist on treating our minds as subjects, while simultaneously (and without fully realizing it) treating our bodies as mechanical objects.

Whenever we try to "reduce" our minds (and their creations) to natural processes, it comes out sounding strange or surprising:

- "Our societies belong to the same general category as the societies of wolves or ants — groups of animals living together, interacting and depending on each other for their survival." — Axel Kristinsson

- Intelligence, like beauty, is a property of the human physical organism. — paraphrased from Mills Baker

- "[Religions] are natural entities, like atomic systems, molecular systems, organ systems, neural systems, and the rest, which have emerged by natural causes in the creative process of cosmic evolution." — Loyal Rue

- Referring to humans: "They're made out of meat... thinking meat." — Terry Bisson

- "For many people 'nature' means the birds, the bees, and the flowers. It means everything that is not 'artificial'.... The 'natural' state of the human being is to be naked — but we wear clothes, and that's 'artificial.' We build houses, but is there any difference between a human house and a wasps' nest or a bird's nest?" — Alan Watts

These statements sound strange, and yet they're straightforward corollaries of what science tells us is true about the world.

"Surprise is the measure of a poor hypothesis," says Eliezer Yudkowsky. "A good model makes reality look normal, not weird."

So if science sounds strange, it points to something wrong with our intuitions. The problem is that while God is dead, we haven't finished refactoring our worldview.

The antidote

And now the antidote: an exercise in organic thinking.

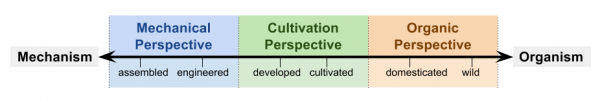

I invite you to consider mechanisms and organisms not as fundamentally different kinds of entities, but as two poles at opposite ends of a spectrum:

What this means is that mechanisms and organisms are really the same kind of thing. They're both just arrangements of matter. It's therefore not a category error to say that a human is a machine (or that a computer is an organism). It just depends on what perspective you want to take.

It's like analyzing a business decision. You might wonder if it was a "tactical" or a "strategic" decision. The answer is probably both, though it may have been dominated by one or the other. But you can still ask, of even the purest "strategic" decision, what "tactical" factors went into it.

In the same way, it's often productive to ask about the "mechanical" properties of things that are clearly "organisms," or vice versa. If someone is doing surgery on me, for example, I'm happy that he can draw on the mechanical perspective of the human body, even though I am fundamentally an organism.

But generally, here in the West, our problem is that we tend to favor the mechanical perspective to the exclusion of the organic perspective. This is true regardless of the type of objects we're considering — whether they're on the organic or mechanical side of the spectrum.

And it follows that the prescription (for counteracting our bias) would be to take the organic perspective as often as possible. In the rest of this essay, then, I'd like to show some examples of how to use the organic perspective, and the kind of insight that can result from it.

Government

The Western bias is to treat governments as the engineered artifices of human design, a folly nowhere better illustrated than in the West's many disasters at third-party state-building over the past hundred years.

(In America this folly may be understood, if not forgiven, in that our government was intentionally designed, nearly from scratch. It's just that this isn't the case with other countries.)

We should therefore spend more time trying to understand governments as organisms. As natural phenomena that arise in all human societies, taking similar form at similar scales. As independent processes with their own agency. As creatures who aren't controlled by humans, but who instead use humans as tools to achieve their own purposes.

If we imagine government as an organism — as something "wild" or in need of "domestication" — we get Hobbes's Leviathan, along with the libertarian critique of government as a parasite growing on top of our societies.

If we imagine government as an already-domesticated organism in need of "cultivation" — well for one, we'd get a far healthier model for how to practice third-party state-building. We would immediately think to ask about Afghanistan (say) whether its institutional soil is rich enough to support the kind of government we're attempting to plant there.

It's not that government can't be construed as an intentionally designed mechanism. It's just that we should round out our understanding of it by occasionally treating it as an organism, as Venkat did in excellent detail a couple of weeks ago.

Technology

If Darwin's insight was to show that it's productive to treat organisms as mechanisms, then there's a complementary/opposite insight arguing that it's productive to treat mechanisms (technology) as organisms.

This is Kevin Kelly's insight, articulated in his books, Out of Control and What Technology Wants.

His point is that tech artifacts can be understood, in a sense, as living creatures. They can move around, metabolize energy, even replicate themselves. Sure they need our interventions to accomplish most of these things (for now), but biological life needs help from its environment too. No creature is an island.

Moreover, technology evolves just as life does. In fact, life and technology have been interrelated processes for all of human history, symbionts locked in a coevolutionary tango, bootstrapping each other ever upward in complexity.

Today most of the things we call "technology" are assembled in factories, as part of a process over which we command breathtaking precision. So it's easy to forget that some of the most important early technologies were truly wild things that we had to domesticate for our own purposes. First we domesticated fire, then wild grains for agriculture, then animals. After struggling against rivers for thousands of years, we finally managed to tame them too.

It might sound silly, but there's a sense in which even rocks needed to be domesticated. We have a stereotype that rocks are lifeless, inert — just lying around waiting to yield to our will. But there's also something stubborn and intransigent about them, and it took untold generations of our ancestors to break their 'will' and bend them to our own.

When we imagine technology as something "wild," something that grows on its own, then we're prompted to think about grey goo, Unfriendly AI, and Ted Kaczynski's critique. A natural reaction, from this perspective, is to think that if technology will continue to grow on its own, out of our control, then we need to act first and kill it (or subdue it) before it's too late.

Whatever you think of these arguments and proposed solutions, they're worth hearing out.

Software

Here's what no one tells you when you graduate with a CS degree and take up a job in software engineering:

The computer is a machine, but a codebase is an organism.

This should make sense to anyone who's worked on a large software project. Computer science is all about controlling the machine — making it do what you want, on the time scale of nano- and milliseconds. But software engineering is more than that. It's also about nurturing a codebase — keeping it healthy (low entropy) as it evolves, on a time scale of months and years.

Like any organism, a codebase will experience both growth and decay, and much of the art of software development lies in learning to manage these two forces.

Growth, for example, isn't an unmitigated good. Clearly a project needs to grow in order to become valuable, but unfettered growth can be a big problem. In particular, a codebase tends to grow opportunistically, by means of short-sighted local optimizations. And the bigger it gets, the more 'volume' it has to maintain against the forces of entropy. Left to its own devices, then, a codebase will quickly devolve into an unmanageable mess, and can easily collapse under its own weight.

Thus any engineer worth her salt soon learns to be paranoid about code growth. She assumes, correctly, that whenever she ceases to be vigilant, the code will get itself into trouble. She knows, for example, that two modules tend to grow ever more dependent on each other unless separated by hard ('physical') boundaries.

(Of course all code changes are introduced by people, by programmers. It's just a useful shortcut to pretend that the code has its own agenda.)

Faced with the necessity but also the dangers of growth, the seasoned engineer seeks a balance between nurture and discipline. She can't be too permissive — coddled code won't learn its boundaries. But she also knows not to be too tyrannical. Code needs some freedom to grow at the optimal rate.

She also understands how to manage code decay. She has a good 'nose' for code smells: hints that a piece of code is about to take a turn for the worse. She knows about code rot, which is what happens when code doesn't get enough testing/execution/exercise. (Use it or lose it, as they say.) She's seen how bad APIs can metastasize across the codebase like a cancer. She even knows when to amputate a large piece of code. Better it should die than continue to bog down the rest of the project.

Bottom line: building software isn't like assembling a car. In terms of growth management, it's more like raising a child or tending a garden. In terms of decay, it's like caring for a sick patient.

And all of these metaphors help explain why you shouldn't build software using the factory model.

The brain

And finally we come to the brain.

For years I've been a strong advocate of the computational model of the brain, but more recently I've started to worry that it's doing us a disservice. It's not that the brain isn't a computer — in fact it can be extremely productive to model it that way. It's just that we may have taken this model too far, to the point where we're now locked in the mechanical perspective.

When applied to the brain, the mechanical perspective tells us that all we need to do is map parts to purposes and we'll have it all figured out. But if we've learned anything from this essay, we should be skeptical that the brain will yield to such a mechanically-minded reverse-engineering effort.

Why? Well, the brain is an organ, literally part of an organism. It's something that grows (by expanding outward while gradually complicating itself). If it ends up in a weird or wrong configuration, it's because of a growth mistake (which is very different from an assembly mistake). Its 'parts', such as they are, aren't discrete and separable, but rather tangled, tightly coupled, and hacked together, and they serve multiple purposes simultaneously: the predictable result of having evolved through a sequence of local optimizations. Even the units out of which the brain is built, the neurons, are themselves better modeled as organisms than as mechanisms.

As Dan Dennett says,

We're beginning to come to grips with the idea that your brain is not this well-organized hierarchical control system where everything is in order, a very dramatic vision of bureaucracy.... We're getting away from the rigidity of that model.

So we would almost certainly benefit from switching, at least on occasion, to the organic perspective.

We might start to wonder, then, what it would be like to treat the 'self' not as a feature of our brains but instead as a growth. We'd be prompted to ask questions like:

In what environment does a self grow best? What conditions make it stronger or weaker? What does it grow in response to? What does it compete with (or trade off against) as it grows?

Could the self be like a parasite? A virus? Is it contagious? How might the presence of a self in one mind (e.g. a parent) induce one to grow in another mind (e.g. a child)?

What else besides the self might be growing in the brain?

Yes, we can still ask mechanical-mindset questions like "What is the purpose of the self?" or "How does it work?" These are the questions Darwin showed us how to ask (and answer) — and I'm not trying to deny their importance. I'm just saying they need to be complemented by a different set of questions.

Final thought

Stepping back for a moment, it's odd that I'm even having to make this argument. Our thinking has been so dominated by the Western mechanical mindset that we've forgotten how to treat the brain — an organ — as an organism. Of course the Western mindset is dominant in part because it's been so successful on so many hard problems. But it constrains our thinking all the same.

If we want to finish the refactoring project Darwin started 150 years ago — to fix up the holes left by removing God from our worldview — we can't just continue to apply mechanical thinking. If we do, it will seem like we're making progress, but eventually we'll reach a dead end where it becomes clear (if it hasn't already) that there are deeper structural problems, and further opportunistic fixes won't get the thing to compile. Better to stop now and go a little against the grain, revisit a few of our long-held and little-questioned assumptions, and hope we won't have to do as much backtracking in the future.

Melting Asphalt